Boosting

```python
import numpy as np
import matplotlib.pyplot as plt
```

```python
from sklearn import datasets

# Two interleaving half-moons with Gaussian noise; fixed seed for reproducibility
X, y = datasets.make_moons(n_samples=500, noise=0.3, random_state=666)
```


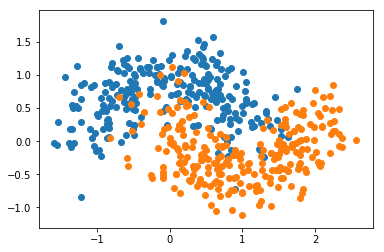

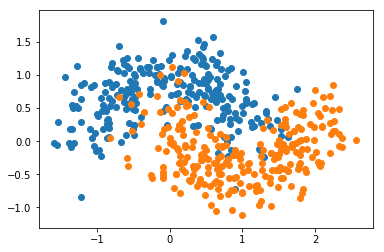

```python
# Plot each class in its own color
plt.scatter(X[y==0,0], X[y==0,1])
plt.scatter(X[y==1,0], X[y==1,1])
plt.show()
```

```python
from sklearn.model_selection import train_test_split

# Default split: 75% train, 25% test
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=666)
```

AdaBoost

```python
from sklearn.tree import DecisionTreeClassifier
from sklearn.ensemble import AdaBoostClassifier

# Boost 500 shallow decision trees (max depth 2) as weak learners
ada_clf = AdaBoostClassifier(
    DecisionTreeClassifier(max_depth=2), n_estimators=500)
ada_clf.fit(X_train, y_train)
```

```
AdaBoostClassifier(algorithm='SAMME.R',
          base_estimator=DecisionTreeClassifier(class_weight=None, criterion='gini', max_depth=2,
            max_features=None, max_leaf_nodes=None,
            min_impurity_decrease=0.0, min_impurity_split=None,
            min_samples_leaf=1, min_samples_split=2,
            min_weight_fraction_leaf=0.0, presort=False, random_state=None,
            splitter='best'),
          learning_rate=1.0, n_estimators=500, random_state=None)
```

```python
# Mean accuracy on the held-out test set
ada_clf.score(X_test, y_test)
```

0.85599999999999998

Gradient Boosting

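```python
from sklearn.ensemble import GradientBoostingClassifier

# Gradient boosting always uses decision trees as base learners,
# so max_depth is set directly on the ensemble
gb_clf = GradientBoostingClassifier(max_depth=2, n_estimators=30)
gb_clf.fit(X_train, y_train)
```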

```
GradientBoostingClassifier(criterion='friedman_mse', init=None,
              learning_rate=0.1, loss='deviance', max_depth=2,
              max_features=None, max_leaf_nodes=None,
              min_impurity_decrease=0.0, min_impurity_split=None,
              min_samples_leaf=1, min_samples_split=2,
              min_weight_fraction_leaf=0.0, n_estimators=30,
              presort='auto', random_state=None, subsample=1.0, verbose=0,
              warm_start=False)
```

```python
# Mean accuracy on the held-out test set
gb_clf.score(X_test, y_test)
```

0.90400000000000003

Boosting for Regression

```python
from sklearn.ensemble import AdaBoostRegressor
from sklearn.ensemble import GradientBoostingRegressor
```
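The two regressors are imported here but never used. The following is a minimal sketch of how they might be applied, assuming a synthetic noisy sine dataset. The names `X_reg`, `y_reg`, `ada_reg`, and `gb_reg` are hypothetical and not from the original, and the hyperparameters are simply carried over from the classifiers above rather than tuned.

```python
import numpy as np
from sklearn.ensemble import AdaBoostRegressor, GradientBoostingRegressor
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Assumption: synthetic 1-D data, since the original shows no regression dataset
rng = np.random.RandomState(666)
X_reg = rng.uniform(-3, 3, size=(500, 1))
y_reg = np.sin(X_reg[:, 0]) + rng.normal(0, 0.2, size=500)

X_reg_train, X_reg_test, y_reg_train, y_reg_test = train_test_split(
    X_reg, y_reg, random_state=666)

# AdaBoost over shallow regression trees (same depth and count as the classifier)
ada_reg = AdaBoostRegressor(
    DecisionTreeRegressor(max_depth=2), n_estimators=500, random_state=666)
ada_reg.fit(X_reg_train, y_reg_train)

# Gradient boosting builds its own trees, so max_depth is set on the ensemble
gb_reg = GradientBoostingRegressor(
    max_depth=2, n_estimators=30, random_state=666)
gb_reg.fit(X_reg_train, y_reg_train)

# For regressors, score() returns the R^2 coefficient of determination
ada_r2 = ada_reg.score(X_reg_test, y_reg_test)
gb_r2 = gb_reg.score(X_reg_test, y_reg_test)
print("AdaBoostRegressor R^2:", ada_r2)
print("GradientBoostingRegressor R^2:", gb_r2)
```

Unlike the classifiers, whose `score` reports accuracy, these values are R^2 scores, so they are not directly comparable to the numbers in the earlier sections.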